A Three-hour Interview With Ji Yichao, Chief Scientist At Manus... (2026)

(Full Video [https://www.youtube.com/watch?v=UqMtkgQe-kI])

This three-hour interview with Ji Yichao, Chief Scientist at Manus (later acquired by Meta), is truly a must-watch for many in the AI industry.

I'll gradually add my key takeaways in the comments. For now, here's a quick rundown of what I found particularly noteworthy and worth sharing.

As a serial AI entrepreneur, Ji Yichao shares insights from a decade of building AI startups. He traces the evolution from tokenization to LSTMs to Transformer applications, and recounts his two attempts at building an AI browser.

He then explains why Manus succeeded: it solved a problem that neither cloud providers nor model providers had solved, namely building a robust tool library that lets LLMs plan tasks autonomously.

He also likens building AI agents to manufacturing, because of the extensive optimization involved. On Manus's product planning and direction, he believes the right approach comes down to "deciding what not to do."

Many technical concepts are touched upon very briefly but are incredibly deep. I'll detail them in the comments.

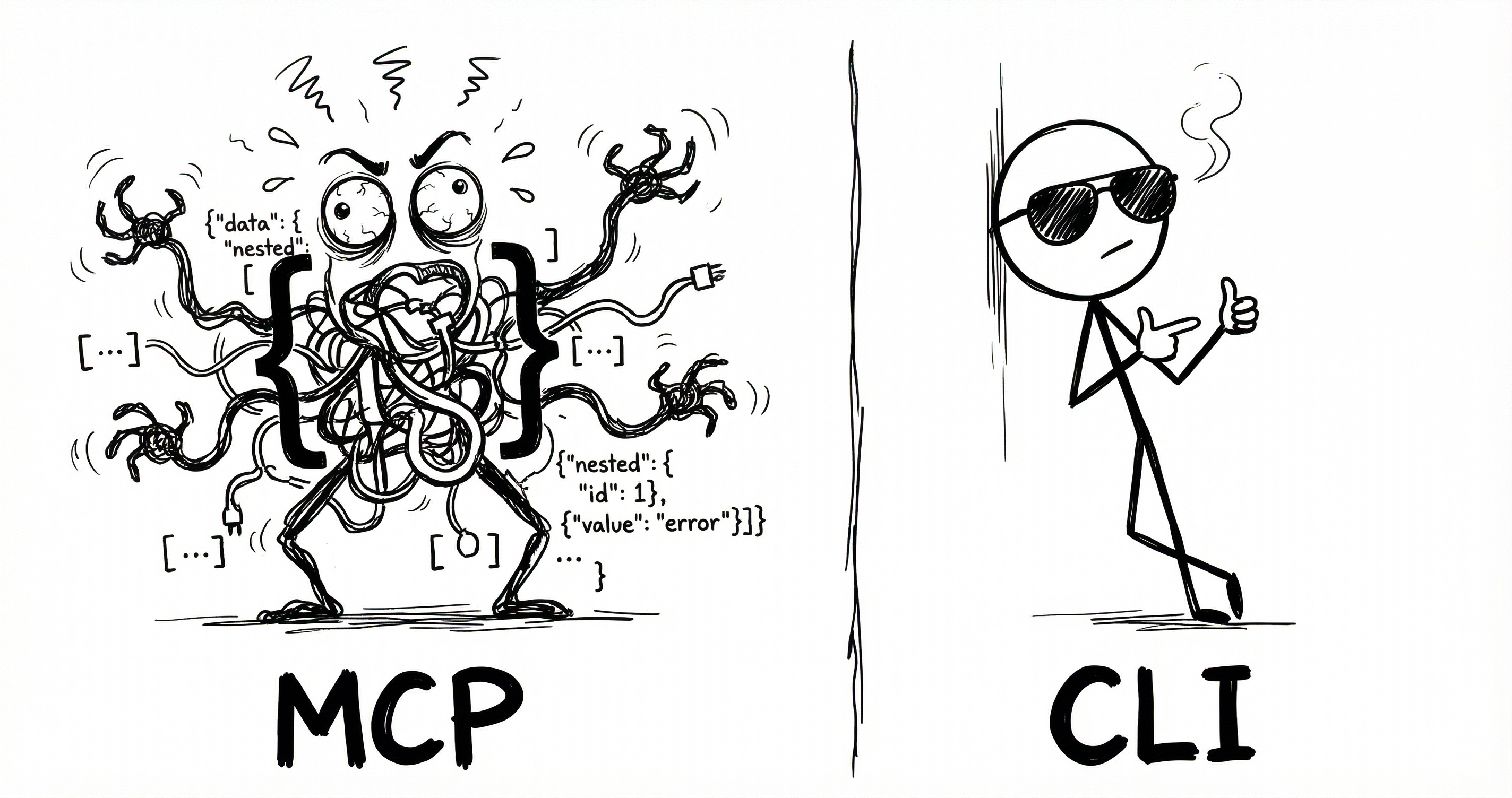

Manus takes a rather conservative approach to MCP. MCP's dynamic tool discovery can pollute the action space: tool definitions sit near the front of the context, so adding tools mid-session changes the prompt prefix and lowers the KV-cache hit rate. A lower cache hit rate can drastically increase costs. The proposed improvement is:

He also notes that this was discussed in Anthropic's blog post "Code execution with MCP: Building more efficient agents." The article explains how a code execution environment makes it more efficient for AI agents to connect to external systems through MCP (Model Context Protocol), an open standard for connecting AI agents to tools and data. It points out that as MCP adoption grows, calling tools directly becomes costly: loading every tool definition into the context up front overloads the context window, and intermediate results that pass through the model consume excessive tokens. By presenting MCP servers as code APIs, agents can load only the tools they need, manage context more effectively, reduce token usage, and improve efficiency.
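The cache argument can be made concrete with a toy Python model. This is a sketch, not Manus's or Anthropic's implementation. It assumes prefix caching reuses only the longest identical prefix between consecutive requests, treats each string as a stand-in for a block of tokens, and uses hypothetical tool names (`search`, `crm_lookup`, `run_code`) and a hypothetical `./servers/crm` path. In the dynamic case, a newly discovered MCP tool is added to the tool list, which sits before the conversation history. In the code-execution case, the tool list stays fixed and discovery appears as one more action appended to the history.

```python
# Toy model of prefix (KV-cache) reuse. Each string stands in for a block of
# tokens. All tool names and paths are hypothetical, for illustration only.


def shared_prefix_len(prev: list[str], curr: list[str]) -> int:
    """Count leading segments that are identical in both prompts."""
    n = 0
    for a, b in zip(prev, curr):
        if a != b:
            break
        n += 1
    return n


def build_prompt(tools: list[str], history: list[str]) -> list[str]:
    # Tool definitions sit right after the system prompt and before the
    # history, so any change to them shifts everything that follows.
    return ["system"] + [f"tool:{t}" for t in tools] + history


def cache_hit_ratio(prompts: list[list[str]]) -> float:
    """Share of segments in each request reused from the previous request's prefix."""
    hits = sum(shared_prefix_len(p, c) for p, c in zip(prompts, prompts[1:]))
    total = sum(len(c) for c in prompts[1:])
    return hits / total


def run(dynamic_discovery: bool, steps: int = 4) -> float:
    tools = ["search"] if dynamic_discovery else ["run_code"]
    history = ["user:task"]
    prompts = []
    for step in range(steps):
        if step == 2:
            if dynamic_discovery:
                # A newly discovered MCP tool is added to the tool list.
                tools = tools + ["crm_lookup"]
            else:
                # Code-execution style: discovery is just another action,
                # appended to the end of the history.
                history = history + ["action:ls ./servers/crm"]
        prompts.append(build_prompt(tools, history))
        history = history + [f"action:{step}"]
    return cache_hit_ratio(prompts)


if __name__ == "__main__":
    print(f"dynamic tool list: {run(True):.2f}")   # → 0.65
    print(f"stable tool list:  {run(False):.2f}")  # → 0.76
```

Both runs send the same number of segments, but in the dynamic case the step that adds `crm_lookup` can reuse only the system prompt and the first tool definition, so the whole history after it is recomputed. With real contexts of many thousands of tokens, that recomputation is the cost increase the interview warns about.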

Related reading: OpenAI, "A practical guide to building agents": https://cdn.openai.com/business-guides-and-resources/a-practical-guide-to-building-agents.pdf

Source: Dev.to