How Agentic BAS AI Turns Threat Headlines Into Defense Strategies

By Sila Özeren Hacioglu, Security Research Engineer at Picus Security.

For security leaders, the most dreaded notification isn't always an alert from their SOC; it’s a link to a news article sent by a board member. The headline usually details a new campaign by a threat group like FIN8 or a recently exposed massive supply chain vulnerability. The accompanying question is brief but paralyzing by implication: "Are we exposed to this right now?"

In the pre-LLM world, answering that question set off a mad race against an unforgiving clock. Security teams had to wait for vendor SLAs, often eight hours or more for emerging threats, or manually reverse-engineer the attack themselves to build a simulation. Though this approach delivered an accurate response, the time it took to do so created a dangerous window of uncertainty.

AI-driven threat emulation has eliminated much of the investigative delay by accelerating analysis and expanding threat knowledge. However, AI emulation still carries risks due to limited transparency, susceptibility to manipulation, and hallucinations.

At the recent BAS Summit, Picus CTO and Co-founder Volkan Ertürk cautioned that “raw generative AI can create exploit risks nearly as serious as the threats themselves.” Picus addresses this by using an agentic, post-LLM approach that delivers AI-level speed without introducing new attack surfaces.

This blog breaks down what that approach looks like, and why it fundamentally improves the speed and safety of threat validation.

The immediate reaction to the generative AI boom was an attempt to automate red teaming by simply asking Large Language Models (LLMs) to generate attack scripts. Theoretically, an engineer could feed a threat intelligence report into a model and ask it to "draft an emulation campaign."

While this approach is undeniably fast, it fails in reliability and safety. As Picus’s Ertürk notes, there’s some danger in taking this approach:

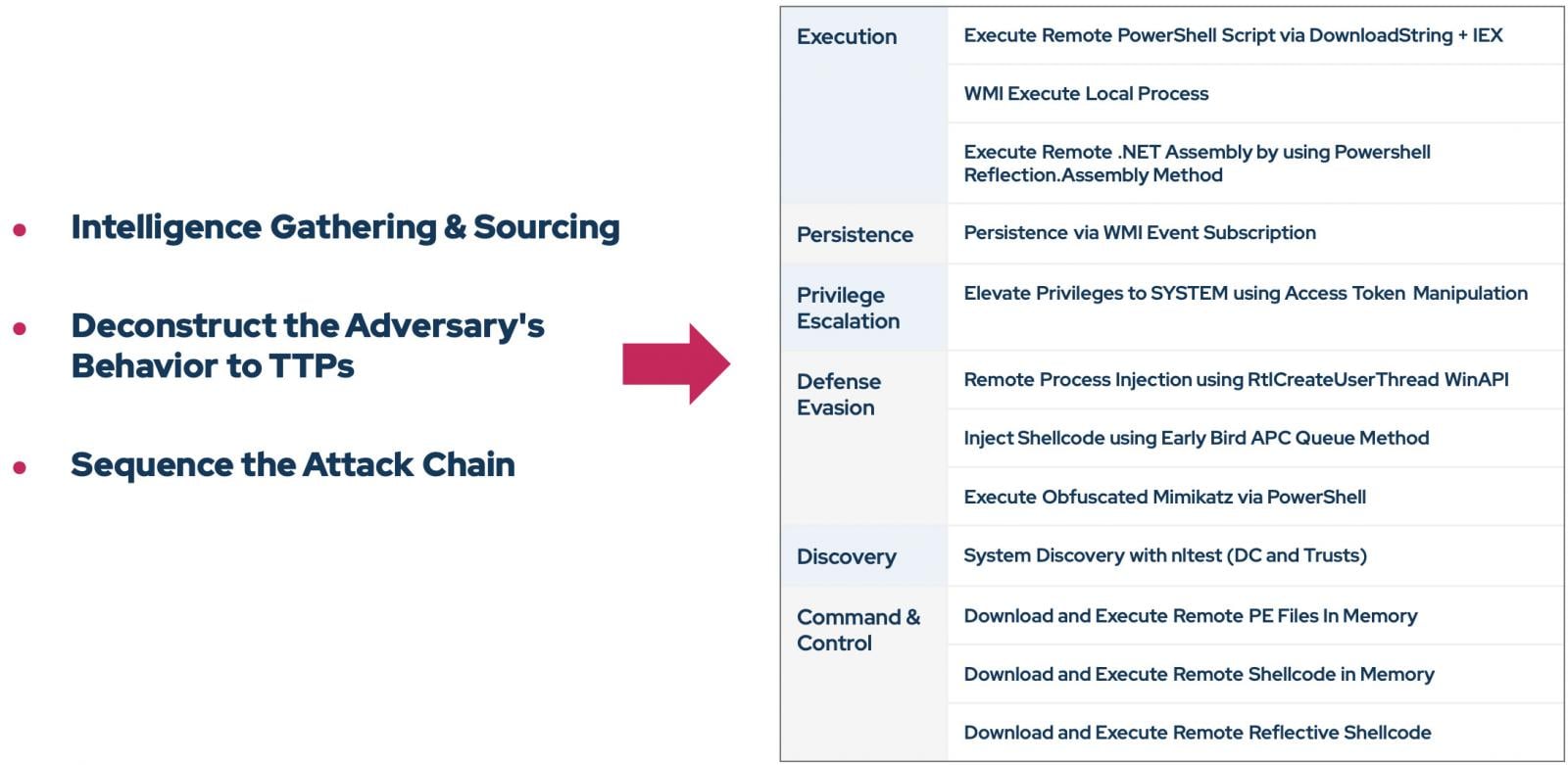

The problem is not only risky binaries. As mentioned above, LLMs are still prone to hallucination. Without strict guardrails, a model might invent TTPs (Tactics, Techniques, and Procedures) that the threat group doesn't actually use, or suggest exploits for vulnerabilities that don't exist. This leaves security teams validating their defenses against theoretical threats while actual ones go unaddressed.
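To make the idea of a guardrail concrete, here is a minimal Python sketch of one possible check: accept a model-proposed technique only if it is well-formed and already present in a vetted catalog for that threat group. This is an illustration under stated assumptions, not a description of how the Picus platform works. The `VETTED_TTPS` catalog, its contents, and the `filter_proposed_ttps` helper are hypothetical names introduced for this example.

```python
import re

# Hypothetical vetted catalog mapping a threat group to technique IDs.
# The values are illustrative placeholders, not a verified attribution.
VETTED_TTPS = {
    "FIN8": {"T1059.001", "T1566.001", "T1047"},
}

# MITRE ATT&CK technique IDs look like T1059 or T1059.001.
TECHNIQUE_ID = re.compile(r"^T\d{4}(\.\d{3})?$")


def filter_proposed_ttps(group, proposed):
    """Split model-proposed technique IDs into (accepted, rejected) lists."""
    known = VETTED_TTPS.get(group, set())
    accepted, rejected = [], []
    for ttp in proposed:
        # Accept only well-formed IDs that the vetted catalog already lists.
        if TECHNIQUE_ID.match(ttp) and ttp in known:
            accepted.append(ttp)
        else:
            rejected.append(ttp)
    return accepted, rejected


if __name__ == "__main__":
    # Assumed model output: two catalogued IDs, one unknown ID, one malformed.
    proposed = ["T1059.001", "T9999", "not-an-id", "T1047"]
    accepted, rejected = filter_proposed_ttps("FIN8", proposed)
    print("accepted:", accepted)
    print("rejected:", rejected)
```

In this sketch, anything the catalog cannot confirm is set aside for human review rather than emulated, so a hallucinated technique never reaches the simulation stage.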

To address these issues, the Picus platform adopts a fundamentally different model: the agentic approach.

Source: BleepingComputer