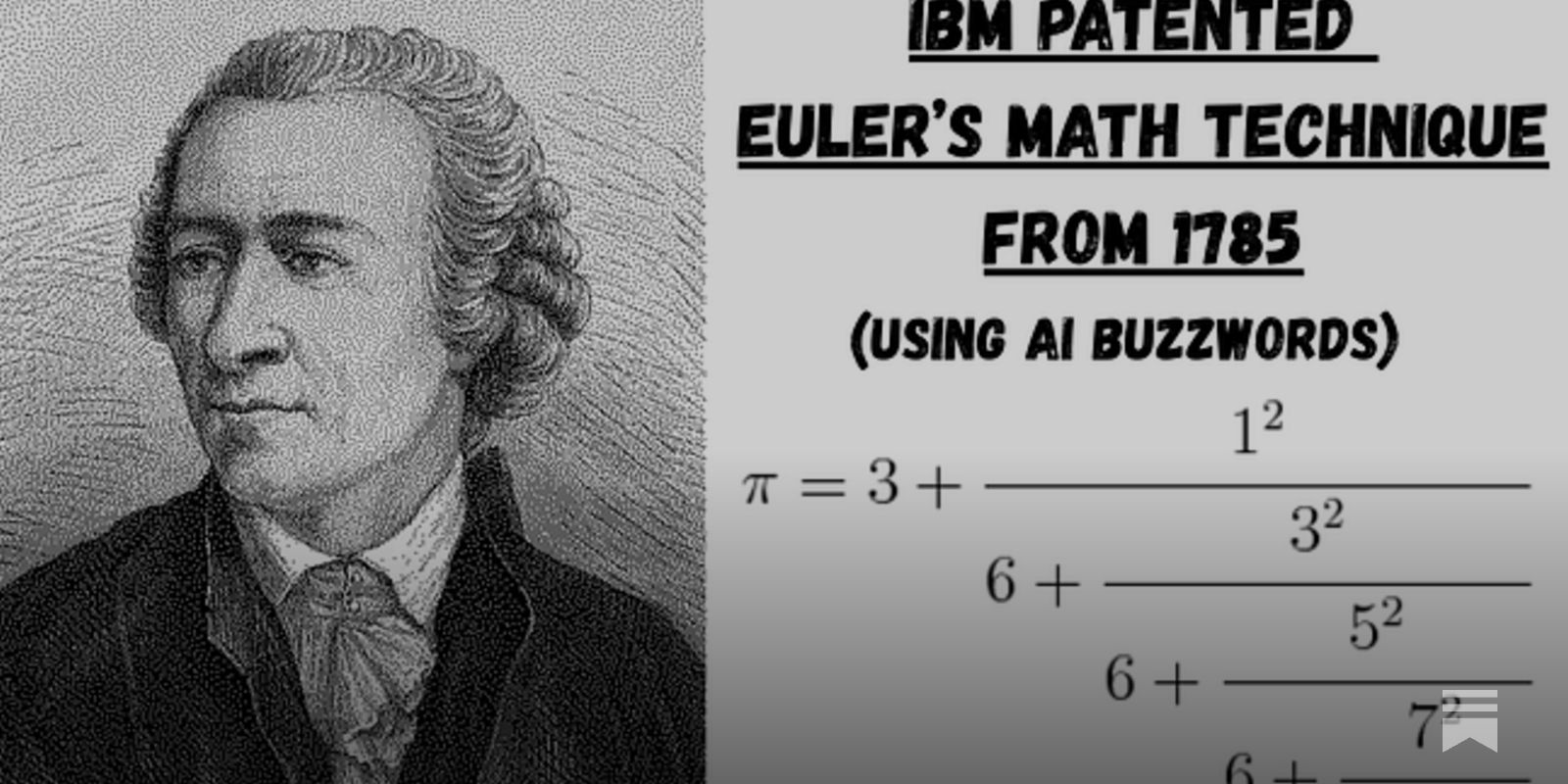

IBM Patented Euler's 200 Year Old Math Technique For 'AI...

Hey, it’s Murage. I code, analyze papers, and prep marketing material solo at LeetArxiv. Fighting patent trolls was not on my 2025 bingo card. Please consider supporting me directly.

As always, code is available on Google Colab and GitHub.

The 2021 paper "CoFrNets: Interpretable Neural Architecture Inspired by Continued Fractions" (Puri et al., 2021) investigates the use of continued fractions in neural network design.

The paper takes 13 pages to assert that continued fractions, just like MLPs, are universal approximators.

Ultimately, the authors take the well-defined concept of generalized continued fractions and call them CoFrNets.

Honestly, the paper is full of pretentious nonsense like this:

Simple continued fractions are mathematical expressions of the form:
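In the standard textbook notation (the symbols $a_0, a_1, a_2, \dots$ are my labels, assumed here rather than quoted from the paper), with $a_0$ an integer and every later $a_i$ a positive integer:

$$
x = a_0 + \cfrac{1}{a_1 + \cfrac{1}{a_2 + \cfrac{1}{a_3 + \cdots}}}
$$

This is usually abbreviated as $[a_0; a_1, a_2, a_3, \dots]$. For example, $\tfrac{415}{93} = [4; 2, 6, 7]$.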

Continued fractions have a long history of practical use:

In 1861, the clockmaker Achille Brocot used continued fractions to design gears for his watches.

Even Ramanujan’s math tricks utilised continued fractions (Barrow, 2000).

Source: HackerNews
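To make the gear-design use above concrete, here is a minimal Python sketch of the classical procedure: expand a ratio into its simple continued fraction, then read off the convergents, which are the small-numerator, small-denominator fractions a gear maker would care about. The function names `continued_fraction` and `convergents` and the target ratio 3.14159 are my own illustrative choices. This is not code from the CoFrNets paper, and it is not a reconstruction of Brocot's actual method.

```python
from fractions import Fraction


def continued_fraction(x):
    """Return the simple continued fraction terms [a0, a1, ...] of a rational x."""
    x = Fraction(x)
    terms = []
    while True:
        a = x.numerator // x.denominator  # integer part (floor)
        terms.append(a)
        remainder = x - a
        if remainder == 0:  # rationals always terminate
            return terms
        x = 1 / remainder  # invert the fractional part and repeat


def convergents(terms):
    """Return the successive convergents p_k/q_k of a term list.

    Uses the standard recurrence
    p_k = a_k * p_(k-1) + p_(k-2),  q_k = a_k * q_(k-1) + q_(k-2).
    """
    p_prev, q_prev = 1, 0
    p, q = terms[0], 1
    result = [Fraction(p, q)]
    for a in terms[1:]:
        p, p_prev = a * p + p_prev, p
        q, q_prev = a * q + q_prev, q
        result.append(Fraction(p, q))
    return result


if __name__ == "__main__":
    # Hypothetical target gear ratio, chosen only for illustration.
    target = Fraction("3.14159")
    terms = continued_fraction(target)
    print(terms)
    for c in convergents(terms)[:4]:
        # Each convergent is a candidate tooth-count ratio.
        print(c, float(c))
```

The first few convergents of this target are 3, 22/7, 333/106 and 355/113. Each one is a progressively better approximation with small tooth counts, which is why the technique is handy when a gear can only have so many teeth.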