Multi-Provider LLM Orchestration in Production: A 2026 Guide

Have you ever seen your app crash because an AI API went down? It is a total nightmare. As of January 2026, relying on a single AI provider is a huge risk for any business. I have spent over seven years building enterprise systems and my own SaaS products, and I have seen firsthand how fragile a single-provider setup can be.

At Ash, I focus on building systems that do not break. You need a way to switch between models like Claude and GPT-4 instantly. That is exactly why multi-provider LLM orchestration in production matters: it keeps your app running even when a major provider has a bad day. You can learn more about the basics of Natural Language Processing to see why these models vary so much.

In this post, I will show you how to set this up the right way. You will learn how to save money and improve your app's speed. We will look at the best tools for the job and how to avoid common traps. Let's make your AI features reliable and fast.

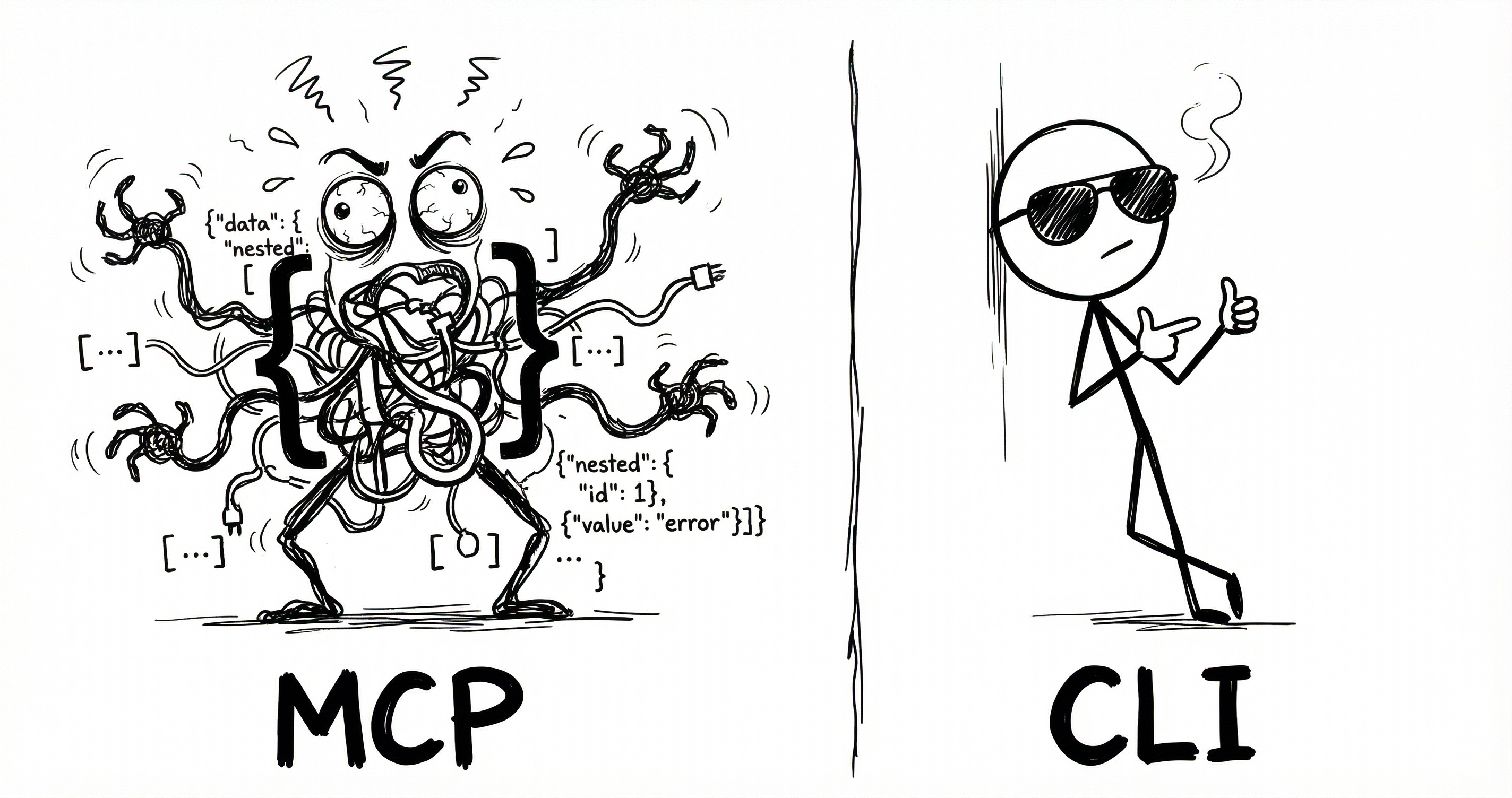

Think of this as a traffic controller for your AI requests. Instead of sending every prompt to one place, you use a smart layer in the middle. This layer decides which model should handle the task. It might send a simple question to a cheaper model. It might send a complex coding task to a more powerful one.
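To make the routing idea concrete, here is a minimal Python sketch of a router. It assumes a crude keyword-and-length heuristic; the model names `small-fast-model` and `large-reasoning-model`, the `COMPLEX_HINTS` keywords, and the 500-character threshold are hypothetical placeholders, not recommendations for any particular provider. A production router could instead score prompts with a classifier or a small model.

```python
from dataclasses import dataclass

# Hypothetical model identifiers: substitute the models your providers offer.
CHEAP_MODEL = "small-fast-model"
STRONG_MODEL = "large-reasoning-model"

# Assumed keywords that hint at a coding or multi-step task.
COMPLEX_HINTS = ("code", "function", "refactor", "debug", "sql", "step by step")


@dataclass
class Route:
    model: str
    reason: str


def route_prompt(prompt: str, max_simple_chars: int = 500) -> Route:
    """Pick a model using a simple keyword-and-length heuristic."""
    text = prompt.lower()
    # Coding or multi-step work goes to the more capable model.
    if any(hint in text for hint in COMPLEX_HINTS):
        return Route(STRONG_MODEL, "looks like a coding or multi-step task")
    # Very long prompts usually carry more context to reason over.
    if len(prompt) > max_simple_chars:
        return Route(STRONG_MODEL, "long prompt")
    # Everything else is treated as a simple question.
    return Route(CHEAP_MODEL, "short, simple prompt")


if __name__ == "__main__":
    print(route_prompt("What is the capital of France?"))
    print(route_prompt("Refactor this Python function to use async I/O"))
```

Substring matching is deliberately crude ("code" also matches "decode"), so treat this as a starting point to replace with whatever signal your own traffic supports.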

This setup is about more than just having a backup. It is about using the right tool for every single job. In 2026, we have so many great options like Gemini, Claude, and GPT-4. Each has its own strengths and weaknesses.

Key parts of this setup include:
• The Router: The logic that picks the best model for each prompt.
• The Gateway: A single entry point that handles authentication for all your providers.
• The Fallback Logic: A system that automatically tries a second provider if the first one fails.
• The Load Balancer: A tool that spreads requests across regions to keep latency low.
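Here is a minimal sketch of the Gateway and Fallback Logic pieces, assuming each provider is wrapped in a plain Python function that raises `ProviderError` when its upstream call fails. `Gateway`, `ProviderError`, `AllProvidersFailed`, and the two stand-in providers are hypothetical names for illustration; a real wrapper would call the vendor's SDK and translate its timeouts and server errors into `ProviderError`. Regional load balancing is not shown.

```python
from typing import Callable, Sequence

# A provider is modeled as a function from prompt to completion text.
# In practice each one would wrap a vendor SDK call; these are stand-ins.
Provider = Callable[[str], str]


class ProviderError(Exception):
    """Raised by a provider wrapper when its upstream call fails."""


class AllProvidersFailed(Exception):
    """Raised when every provider in the chain has failed."""


class Gateway:
    """Single entry point that tries providers in order (a fallback chain)."""

    def __init__(self, providers: Sequence[tuple[str, Provider]]):
        if not providers:
            raise ValueError("need at least one provider")
        self.providers = list(providers)

    def complete(self, prompt: str) -> tuple[str, str]:
        """Return (provider_name, completion) from the first provider that succeeds."""
        errors: list[str] = []
        for name, call in self.providers:
            try:
                return name, call(prompt)
            except ProviderError as exc:
                # Record the failure and fall through to the next provider.
                errors.append(f"{name}: {exc}")
        raise AllProvidersFailed("; ".join(errors))


def flaky_primary(prompt: str) -> str:
    raise ProviderError("503 Service Unavailable")  # simulated outage


def healthy_backup(prompt: str) -> str:
    return f"backup answer to: {prompt}"


if __name__ == "__main__":
    gw = Gateway([("primary", flaky_primary), ("backup", healthy_backup)])
    print(gw.complete("Summarize this ticket"))
```

The order of the list is the fallback policy, so the preferred provider goes first. Catching only `ProviderError`, rather than every exception, keeps genuine programming bugs from silently triggering a fallback.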

The biggest reason to do this is reliability. If OpenAI has an outage, your app stays online by switching to Anthropic. I have seen companies lose thousands of dollars in minutes because their only AI provider went down. Most teams see 99.99% uptime when they use more than one provider.
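Why does a second provider help so much? If you assume outages at different providers are independent, all of them are down at once only with probability (1 - a1)(1 - a2)..., so combined availability is 1 minus that product. The sketch below computes this figure; the 99.9% inputs are hypothetical, not any vendor's published SLA.

```python
from math import prod


def combined_availability(availabilities: list[float]) -> float:
    """Probability that at least one provider is up.

    Assumes provider outages are statistically independent, which real
    incidents often violate, so read the result as an optimistic bound.
    """
    for a in availabilities:
        if not 0.0 <= a <= 1.0:
            raise ValueError("availabilities must be between 0 and 1")
    # Every provider must be down at the same time for the app to fail.
    all_down = prod(1.0 - a for a in availabilities)
    return 1.0 - all_down


if __name__ == "__main__":
    # Hypothetical inputs: two providers, each up 99.9% of the time.
    print(f"{combined_availability([0.999, 0.999]):.6f}")  # prints 0.999999
```

Treat the result as an upper bound: shared dependencies such as a common cloud region, DNS, or your own gateway correlate failures, and the time spent detecting an outage before failing over also counts against uptime.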

Cost is another huge factor. Some models are much cheaper for simple tasks. By routing easy prompts to smaller models, you can save a lot of money. Many of my projects have seen a 30% reduction in API costs after setting this up.
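The savings from routing follow from a weighted average. If a fraction f of requests goes to a model whose average cost per request is c_cheap, and the rest stays on a model costing c_strong, the fractional saving versus sending everything to the strong model is f × (1 - c_cheap / c_strong). The function below is a sketch of that arithmetic; the share and prices in the example are hypothetical inputs, not measured figures.

```python
def routing_savings(cheap_share: float, cheap_cost: float, strong_cost: float) -> float:
    """Fractional cost reduction versus sending every request to the strong model.

    cheap_share: fraction of requests routed to the cheaper model (0 to 1).
    cheap_cost, strong_cost: average cost per request for each model.
    """
    if not 0.0 <= cheap_share <= 1.0:
        raise ValueError("cheap_share must be between 0 and 1")
    if strong_cost <= 0:
        raise ValueError("strong_cost must be positive")
    baseline = strong_cost
    # Weighted average cost per request after routing.
    blended = cheap_share * cheap_cost + (1.0 - cheap_share) * strong_cost
    return 1.0 - blended / baseline


if __name__ == "__main__":
    # Hypothetical: 40% of traffic moves to a model costing a quarter as much.
    print(f"{routing_savings(0.4, 0.25, 1.0):.0%}")  # prints 30%
```

Real savings also depend on prompt and completion lengths, since most providers bill per token, and on how much of your traffic is genuinely simple enough for the cheaper model.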

Benefits you will notice fast:
• No vendor lock-in: You can move your traffic whenever a provider cha

Source: Dev.to