You Should Write An Agent (2025)

Some concepts are easy to grasp in the abstract. Boiling water: apply heat and wait. Others you really need to try. You only think you understand how a bicycle works, until you learn to ride one.

There are big ideas in computing that are easy to get your head around. The AWS S3 API. It’s the most important storage technology of the last 20 years, and it’s like boiling water. Other technologies, you need to get your feet on the pedals first.

People have wildly varying opinions about LLMs and agents. But whether or not they’re snake oil, they’re a big idea. You don’t have to like them, but you should want to be right about them. To be the best hater (or stan) you can be.

So that’s one reason you should write an agent. But there’s another reason that’s even more persuasive:

Agents are the most surprising programming experience I’ve had in my career. Not because I’m awed by the magnitude of their powers — I like them, but I don’t like-like them. It’s because of how easy it was to get one up on its legs, and how much I learned doing that.

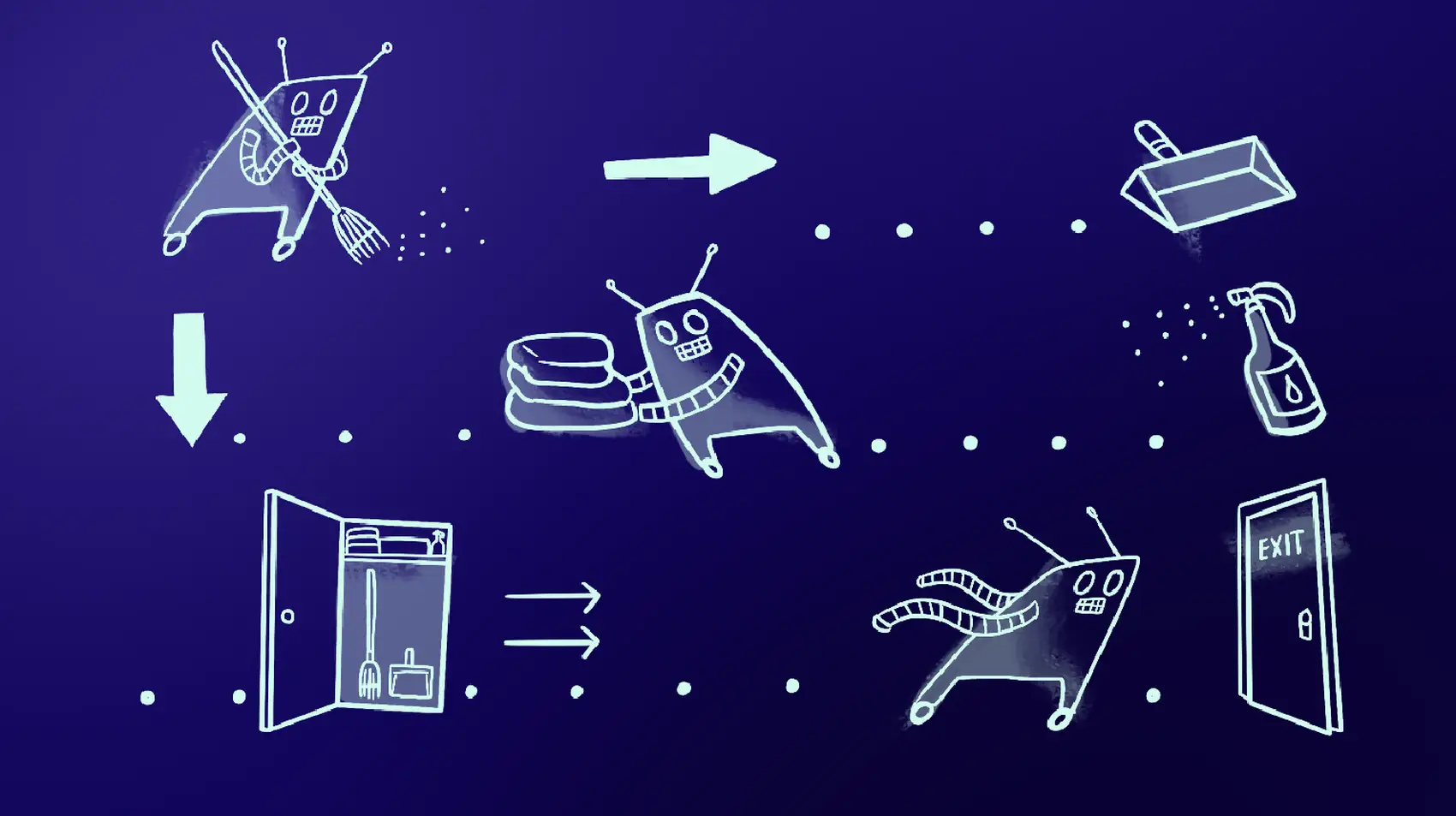

I’m about to rob you of a dopaminergic experience, because agents are so simple we might as well just jump into the code. I’m not even going to bother explaining what an agent is.

It’s an HTTP API with, like, one important endpoint.
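To make "one endpoint" concrete, here is a minimal sketch of calling the OpenAI Responses API (`POST https://api.openai.com/v1/responses`) with nothing but the Python standard library. The model name `gpt-4.1-mini` and the `OPENAI_API_KEY` environment variable are assumptions. The parsing assumes the response shape in which assistant text lives in `output` items of type `message`, inside content parts of type `output_text`.

```python
import json
import os
import urllib.request

# Assumed endpoint and model name; substitute whatever your account supports.
RESPONSES_URL = "https://api.openai.com/v1/responses"
MODEL = "gpt-4.1-mini"


def build_request(context: list[dict], api_key: str) -> urllib.request.Request:
    """Package the whole conversation (the "context") as a single POST request."""
    body = json.dumps({"model": MODEL, "input": context}).encode("utf-8")
    return urllib.request.Request(
        RESPONSES_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_text(response: dict) -> str:
    """Concatenate the text of every output_text part in the model's reply."""
    parts = []
    for item in response.get("output", []):
        if item.get("type") != "message":
            continue  # skip non-message items, such as reasoning or tool calls
        for part in item.get("content", []):
            if part.get("type") == "output_text":
                parts.append(part.get("text", ""))
    return "".join(parts)


def call_llm(context: list[dict]) -> str:
    """Send the context to the endpoint and return the assistant's text."""
    request = build_request(context, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(request) as resp:
        return extract_text(json.loads(resp.read()))
```

With a key set, `call_llm([{"role": "user", "content": "Say hello."}])` should return the model's reply as a plain string. Everything else an agent does is built on top of calls like this one.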

A trivial engine for an LLM app, built on the OpenAI Responses API, implements ChatGPT. You’d drive it with the obvious loop, and it’ll do what you’d expect: the same thing ChatGPT would, but in your terminal.
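A minimal sketch of such an engine and its loop, under stated assumptions rather than as the post's own code. `call` stands for any function that sends the full context list to the model and returns its reply text (a thin wrapper around the Responses API would do). It is passed in as a parameter so the sketch runs without a network connection or API key. The names `process` and `repl` are hypothetical.

```python
from typing import Callable

# A "call" takes the entire context list and returns the model's reply text.
Call = Callable[[list[dict]], str]


def process(line: str, context: list[dict], call: Call) -> str:
    """One turn: record the user's line, ask the model, record its answer."""
    context.append({"role": "user", "content": line})
    reply = call(context)  # the model is handed the whole history every time
    context.append({"role": "assistant", "content": reply})
    return reply


def repl(call: Call, read: Callable[[str], str] = input) -> list[dict]:
    """The obvious loop: read a line, process it, print the reply, repeat.

    Stops on EOF (Ctrl-D) and returns the accumulated context.
    """
    context: list[dict] = []
    while True:
        try:
            line = read("> ")
        except EOFError:
            return context
        print(f">>> {process(line, context, call)}\n")
```

Running it for real is just `repl(call)` with a `call` that hits the API. The only state in the whole program is the `context` list that `repl` builds up and hands back when the session ends.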

Already we’re seeing important things. For one, the dreaded “context window” is just a list of strings. Here, let’s give our agent a weird multiple-personality disorder.
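Because the context is just a list, nothing stops us from keeping two of them. A sketch of the idea, with persona prompts that are made up for illustration. Each user line goes into both histories, a coin flip decides which persona the model answers from, and the answer is recorded in both, so each persona "remembers" what the other said. As before, `call` is assumed to be any function from a context list to reply text. A seeded `random.Random` makes runs reproducible.

```python
import random
from typing import Callable

Call = Callable[[list[dict]], str]

# Two independent context windows, each opening with its own (hypothetical) persona.
truthful = [{"role": "system", "content": "You are Truthful. You only tell the truth."}]
liar = [{"role": "system", "content": "You are Liar. You only tell lies."}]


def process(line: str, call: Call, rng: random.Random) -> str:
    """Answer from one persona at random, but record the turn in both histories."""
    for ctx in (truthful, liar):
        ctx.append({"role": "user", "content": line})
    chosen = truthful if rng.random() < 0.5 else liar
    reply = call(chosen)
    for ctx in (truthful, liar):
        ctx.append({"role": "assistant", "content": reply})
    return reply
```

The model never knows it is being swapped: each call only sees whichever list it is handed, so switching "personalities" is nothing more than switching lists.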

A subtler thing to notice: we just had a multi-turn conversation with an LLM. To do that, we remembered everything we said, and everything the LLM said back, and played it back with every LLM call. The LLM itself is a stateless black box. The conversation we’re having is an illusion we cast, on ourselves.
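The statelessness is easy to see if the model is replaced by a stub that records what it receives. In this sketch, `RecordingModel` is a hypothetical test double, not part of any API. Because every turn re-sends the entire history, the request carries 1, then 3, then 5 messages: 2n - 1 messages on turn n. Throw the list away and the "memory" goes with it.

```python
class RecordingModel:
    """A stand-in for a stateless LLM: it keeps nothing between calls except a log."""

    def __init__(self) -> None:
        self.payload_sizes: list[int] = []

    def __call__(self, context: list[dict]) -> str:
        # Record only how many messages were sent; no conversation state is kept.
        self.payload_sizes.append(len(context))
        # The stub can only "remember" what is in the context it was just handed.
        said = [m["content"] for m in context if m["role"] == "user"]
        return f"So far you have said: {said}"


def chat(lines: list[str], model: RecordingModel) -> list[dict]:
    """Replay the whole conversation with every call, as a chat client must."""
    context: list[dict] = []
    for line in lines:
        context.append({"role": "user", "content": line})
        context.append({"role": "assistant", "content": model(context)})
    return context


model = RecordingModel()
history = chat(["hi", "my name is Ada", "what's my name?"], model)
print(model.payload_sizes)  # → [1, 3, 5]
```

The stub "knows" the user's name on the third turn only because the client sent it again; a real LLM is in the same position.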

Source: HackerNews